Underfitting and Overfitting in Machine Learning

Even when a machine is trained to solve a specific problem, there are still cases where it performs far below the expected outcome.

This often comes down to two problems: underfitting and overfitting. Let's dive in and see what these terms mean.

To understand this better, let's consider three situations a machine can end up in, based on its accuracy on the training set and the testing set. Training accuracy tells us how well the machine learned the data used for training. Similarly, testing accuracy tells us how well the machine performs on unknown data.

Assume three machines: M1, M2, and M3.
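As a minimal sketch of what "accuracy" means here, the snippet below computes it as the fraction of predictions that match the true labels. The function name `accuracy` and the cat/dog labels are made up for illustration; they are not from any particular library.

```python
def accuracy(predictions, labels):
    """Return the fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical predictions and true labels for four test samples.
predicted = ["cat", "dog", "cat", "cat"]
actual = ["cat", "dog", "dog", "cat"]

# Three of the four predictions match the true labels.
score = accuracy(predicted, actual)
print(f"accuracy: {score:.2f}")
```

Running the same function once on the training set and once on a held-out testing set gives the two numbers compared in the rest of this article.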

M1: Let's say this machine learns the training data very well and reaches a training accuracy of 99%. We then test it on unknown data, meaning data that belongs to the same classes but was not used for training. Unknown data doesn't mean training on cats and testing on dogs. It means training on one set of cat data and testing on a different set of cat data to see how well the model generalizes.

Suppose M1 scores only 60% on that unknown data. With a training accuracy of 99% and a testing accuracy of 60%, we can say M1 is not generalizing well.

Now let's look at machine 2 (M2).
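To make M1's behaviour concrete, here is a toy sketch of a model that memorizes its training data instead of learning a rule. Everything in it is an assumption for illustration: the `MemorizingClassifier` class, the even/odd task, and the fallback label are invented, and the resulting numbers are not the 99% and 60% from the example above.

```python
class MemorizingClassifier:
    """Toy model that stores training pairs instead of learning a rule."""

    def __init__(self, default_label):
        self.default_label = default_label  # used for inputs never seen before
        self.memory = {}

    def fit(self, inputs, labels):
        # "Training" is just remembering every (input, label) pair.
        self.memory = dict(zip(inputs, labels))
        return self

    def predict(self, inputs):
        # Look up each input; fall back to the default for unseen inputs.
        return [self.memory.get(x, self.default_label) for x in inputs]


def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def parity(n):
    return "even" if n % 2 == 0 else "odd"


# Same task (even vs. odd), but different numbers for training and testing.
train_inputs = list(range(0, 10))
test_inputs = list(range(10, 20))
train_labels = [parity(n) for n in train_inputs]
test_labels = [parity(n) for n in test_inputs]

model = MemorizingClassifier(default_label="even").fit(train_inputs, train_labels)

train_accuracy = accuracy(model.predict(train_inputs), train_labels)
test_accuracy = accuracy(model.predict(test_inputs), test_labels)

print(f"training accuracy: {train_accuracy:.2f}")
print(f"testing accuracy:  {test_accuracy:.2f}")
```

The model is perfect on the numbers it has seen and no better than a coin flip on new ones, which is the same pattern as M1: high training accuracy, much lower testing accuracy.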

M2: This machine performs well on both the training data and the testing data, with an accuracy of 99% for training and 95% for testing. So we can say this model has learned the training data well and generalizes well to unknown data. That suggests the training data covers the problem pretty well and the model is a reasonable candidate for production.

Finally, machine 3 (M3).

M3: This is the case where the machine performs poorly on the training data as well as the testing data. It has not even learned the training data well, and since it didn't learn well, its accuracy on unknown data will usually be no better than its already low training accuracy.

So, after observing all three machines, the conclusion for each one is:

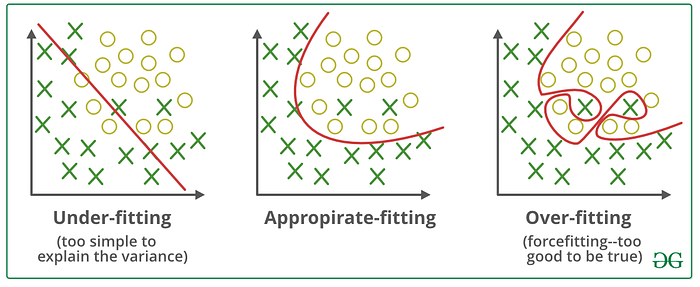

M1: It memorizes the training data well but is unable to apply what it learned to new data. This is known as overfitting.

M2: It learns the underlying patterns in the data well enough to classify unknown data too (a conceptual type of learning). This is known as a best fit.

M3: In this case the machine fails to learn even the training data, so it does not perform well on the test data either. This is known as underfitting.
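The three conclusions can be sketched as a simple rule of thumb in code. This is only an illustration: the function name `diagnose_fit` and the thresholds `min_train` and `max_gap` are arbitrary assumptions, and M3's accuracies are invented because the example above gives no numbers for it.

```python
def diagnose_fit(train_accuracy, test_accuracy, min_train=0.90, max_gap=0.10):
    """Label a model from its training and testing accuracy.

    min_train and max_gap are illustrative thresholds, not standard values.
    """
    if train_accuracy < min_train:
        # The model has not even learned the training data.
        return "underfitting"
    if train_accuracy - test_accuracy > max_gap:
        # Learned the training data, but does not carry over to new data.
        return "overfitting"
    return "best fit"


machines = {
    "M1": (0.99, 0.60),  # accuracies from the example above
    "M2": (0.99, 0.95),  # accuracies from the example above
    "M3": (0.55, 0.50),  # assumed values, for illustration only
}

results = {
    name: diagnose_fit(train_acc, test_acc)
    for name, (train_acc, test_acc) in machines.items()
}

for name, verdict in results.items():
    print(f"{name}: {verdict}")
```

In practice the right thresholds depend on the problem, so treat the numbers here as placeholders rather than a rule.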

Take a look at the three types of fit to build an even better understanding. There are more concepts linked to this, such as bias and variance, and those are the topic of our next article.

Hope you liked it and got the essence of the title. I try to keep everything short and sweet so it is easy to learn. If you want to learn about more interesting topics, follow me and stay tuned :) Thanks for reading!