
Large Language Models

Hi everyone :)

Thank you all for following my articles and reading them through. I am now starting a new series of articles on the concepts behind LLMs (Large Language Models). I will try to explain each concept in the simplest way possible so that everyone can understand it. So let's dive in.

"LLM" is one of the most talked-about terms of recent years, thanks to the advances in generative AI. The concept has become so popular largely because of the evolution of the architecture known as the Transformer.

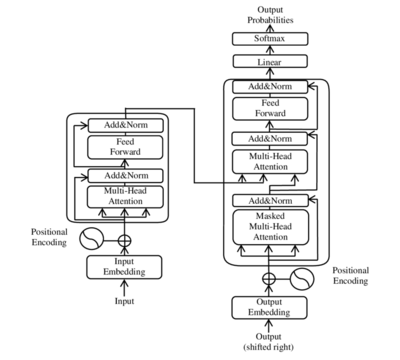

To put it simply, Transformers made natural language processing far more efficient. They keep the Encoder-Decoder architecture used by earlier sequence-to-sequence models, but replace step-by-step recurrence with an attention mechanism, which lets the model look at all the words of a sentence at once. Let's take language translation as an example: "A" knows only English and "B" knows only French, so someone has to translate for the two of them to communicate. The Encoder part of the Transformer takes the input text (English or French) and turns it into embeddings, which are numeric vectors that capture the meaning of each word in its context. The Decoder part then uses those embeddings to generate the text in the other language, one word at a time. This is just a high-level summary of how a Transformer works.
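The step where the Decoder "reads" the Encoder's embeddings is done with attention (in the original Transformer this is called encoder-decoder or cross-attention). Below is a minimal sketch in plain Python of scaled dot-product attention for a single decoder query. The three-dimensional embeddings, the token names and the decoder query are all made-up toy values, assumed for illustration only; a real Transformer learns these vectors and adds multiple heads, learned projections and feed-forward layers on top.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity between the query and every key, scaled by sqrt(d)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted sum of the value vectors
    context = [0.0] * len(values[0])
    for w, v in zip(weights, values):
        for i, x in enumerate(v):
            context[i] += w * x
    return weights, context


# Hypothetical encoder output: one toy embedding per English input word
encoder_embeddings = {
    "the": [1.0, 0.0, 0.0],
    "cat": [0.0, 1.0, 0.0],
    "sleeps": [0.0, 0.0, 1.0],
}
keys = list(encoder_embeddings.values())

# Hypothetical decoder query while it is about to produce the French word "chat"
decoder_query = [0.0, 2.0, 0.0]

# Here the encoder embeddings serve as both keys and values
weights, context = attention(decoder_query, keys, keys)
for token, w in zip(encoder_embeddings, weights):
    print(f"{token:>7}: {w:.2f}")
```

Running it shows the largest attention weight on "cat", i.e. while generating "chat" the decoder focuses mostly on the matching English word, while still mixing in a little information from the rest of the sentence.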

The evolution of Transformers for natural language tasks set the stage for context-based models to develop further and improve their performance. Training (fine-tuning) language models on prompts (user inputs/questions) paired with the desired responses, an approach often called instruction tuning, has further improved their accuracy. This is different from prompt engineering, which is the practice of carefully wording the input given to an already-trained model without changing the model itself.
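To make "training on prompts and responses" a bit more concrete, here is a minimal sketch of how prompt/response pairs might be turned into training text. The `### Instruction` / `### Response` template, the `TrainingExample` class and the `build_example` function are hypothetical names chosen for illustration; every project defines its own format, and the actual tokenization and training loop are not shown.

```python
from dataclasses import dataclass

# Hypothetical prompt template; real datasets each use their own format
TEMPLATE = "### Instruction:\n{prompt}\n\n### Response:\n"


@dataclass
class TrainingExample:
    text: str            # full string the model is trained on
    response_start: int  # character index where the response begins


def build_example(prompt: str, response: str) -> TrainingExample:
    # Wrap the user prompt in the template, then append the desired response
    prefix = TEMPLATE.format(prompt=prompt)
    return TrainingExample(text=prefix + response, response_start=len(prefix))


pairs = [
    ("Translate to French: Good morning", "Bonjour"),
    ("What is the capital of France?", "Paris"),
]
examples = [build_example(p, r) for p, r in pairs]

for ex in examples:
    # The part after response_start is what the model should learn to generate
    print(repr(ex.text[ex.response_start:]))
```

Keeping `response_start` around reflects a common choice in instruction tuning: the training loss is often computed only on the response part, so the model learns to answer prompts rather than to repeat them.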